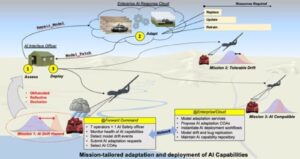

The U.S. Air Force Research Laboratory's (AFRL) Rome, N.Y., branch is seeking innovative ideas from small businesses on artificial intelligence (AI) and "next generation distributed command and control" to aid the Air Force in "contested environments." An AFRL business notice laid out an umbrella contract worth $99 million through 2028. The plan budgets $40.9 million in awards for fiscal 2024 and 2025. "Much of the DoD’s AI is currently designed and built by data scientists in pristine 'lab-like' environments with low-stress…